Higher Level Spacecraft Autonomy

Spacecraft autonomy has long been regarded as a holy grail of spacecraft guidance, navigation, and control research. Decades of development have yielded few fully autonomous spacecraft; instead, mission planners and operators increasingly rely on automated planning tools to inform and support high-level decision making, with humans kept in the loop in part because of their ability to act under environmental uncertainty, changing or competing mission objectives, and hardware failure. Advances in artificial intelligence and machine learning present one avenue by which these problems can be addressed without bringing humans into the loop. This work aims to extend the state of the art in spacecraft autonomy by applying contemporary machine learning techniques to the high-level mission planning problem.

At present, examples of spacecraft autonomy typically fall into two categories: rule-based autonomy and optimization-based autonomy. Rule-based autonomy treats a spacecraft as a state machine consisting of a set of mode behaviors and defined transitions between modes. Pioneered by missions like Deep Impact, and currently used by missions such as the Planet Labs constellation, spacecraft using rule-based autonomy transition between operational and health-keeping modes (charging, momentum-exchange device desaturation) autonomously, without ground contact. Typically, these autonomous mode sequences are pre-defined or based on pre-launch criteria. Rule-based approaches are attractive from an implementation perspective, as they require little computing power and their rigid transition criteria can be validated through testing. Nevertheless, these rigid rule sets are brittle to changes in mission parameters, such as hardware failures or new science objectives. They also require an accurate understanding of the mission environment in order to prepare for unwanted behavior. Additionally, rule-based approaches do not readily support the integration of multiple competing mission objectives, and require that those trades be made on the ground, with humans in the loop, before mission sequences are uploaded.
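As an illustration of the state-machine view described above, the following is a minimal sketch of a rule-based mode manager. The mode names, telemetry fields, and thresholds are all hypothetical and chosen only for illustration; they do not come from any particular mission or flight software.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    SCIENCE = auto()
    CHARGING = auto()
    DESATURATION = auto()


@dataclass
class Telemetry:
    battery_fraction: float      # 0.0 (empty) to 1.0 (full)
    wheel_speed_fraction: float  # wheel speed as a fraction of its limit


# Hypothetical thresholds, fixed before launch (assumed values).
LOW_BATTERY = 0.3
CHARGED = 0.9
WHEEL_SATURATED = 0.8
WHEEL_RECOVERED = 0.2


def next_mode(mode: Mode, tlm: Telemetry) -> Mode:
    """Apply fixed transition rules; stay in the current mode if none fires."""
    if mode is Mode.SCIENCE:
        if tlm.battery_fraction < LOW_BATTERY:
            return Mode.CHARGING
        if tlm.wheel_speed_fraction > WHEEL_SATURATED:
            return Mode.DESATURATION
    elif mode is Mode.CHARGING:
        if tlm.battery_fraction >= CHARGED:
            return Mode.SCIENCE
    elif mode is Mode.DESATURATION:
        if tlm.wheel_speed_fraction <= WHEEL_RECOVERED:
            return Mode.SCIENCE
    return mode
```

Every transition is an explicit threshold check, which is cheap to run and easy to test exhaustively. The brittleness is also visible: a new objective or a degraded battery would require someone to rewrite the rules and thresholds by hand.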

In contrast to rule-based approaches, a second class of tools uses models of spacecraft behavior and hardware to generate mission plans on the ground while considering mission objectives; this work broadly describes these tools as optimization-based autonomy. Within this class of algorithms, the spacecraft and its mission are viewed in the framework of constrained optimization: the spacecraft's hardware and trajectory act as constraints, and metrics of mission return (images taken, communication link uptime, or other criteria) are the values being optimized. Unlike rule-based autonomy, optimization-based autonomy typically requires large amounts of computing power, which precludes its use on board. This method also requires realistic models and well-developed testing environments. Examples include the Applied Physics Laboratory's SciBox software library (used to generate MESSENGER mode sequences) and the ASPEN mission planning suite, developed by the Jet Propulsion Laboratory and applied to the Earth Observing-1 mission.
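The constrained-optimization framing can be made concrete with a toy planner. The sketch below is not how SciBox or ASPEN work; it is a deliberately simple exhaustive search, and the candidate observations, their science values, and the energy budget are hypothetical. It selects the set of observations that maximizes total science value without exceeding the energy budget.

```python
from itertools import combinations


def plan_observations(candidates, energy_budget):
    """Pick the subset of candidates with the highest total science value
    whose total energy cost fits within the budget.

    candidates: list of (name, science_value, energy_cost) tuples.
    Returns (list of chosen names, total value).
    """
    best_value, best_plan = 0, ()
    # Exhaustive search over all non-empty subsets (2**n - 1 of them).
    for k in range(1, len(candidates) + 1):
        for subset in combinations(candidates, k):
            cost = sum(c[2] for c in subset)
            value = sum(c[1] for c in subset)
            if cost <= energy_budget and value > best_value:
                best_value, best_plan = value, subset
    return [c[0] for c in best_plan], best_value


# Hypothetical targets: (name, science value, energy cost).
targets = [("A", 5, 4), ("B", 4, 3), ("C", 3, 2), ("D", 1, 1)]
plan, value = plan_observations(targets, energy_budget=5)
```

With these assumed numbers the planner selects targets B and C, for a total value of 7. The number of subsets doubles with every added candidate, which hints at why realistic planners with many activities and constraints tend to run on the ground rather than on flight hardware.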

As both rule- and optimization-based autonomy techniques mature, the search for next-generation spacecraft autonomy approaches has begun. As with contemporary approaches, emerging techniques in autonomy should reduce mission development and operational cost while improving mission returns. New techniques should also improve upon autonomy runtime, the ability to deal with uncertainty, the ability to learn from past experiences, and the ability to make mission-level decisions. The need for adaptability strongly suggests the use of machine learning (ML) techniques at the core of autonomous decision software.

Recent advances in machine learning may hold the key to these next-generation approaches for spacecraft autonomy and on-board decision-making, as they allow agents, by definition, to improve their behaviors as they gain experience. Contemporary reinforcement learning approaches, for example, do not require knowledge of system models and scale relatively well to large problems with multiple constraints or non-convex reward functions. This work aims to explore the applications and frameworks necessary to apply deep reinforcement learning to the spacecraft decision-making problem.

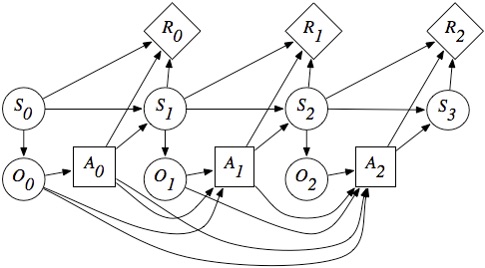

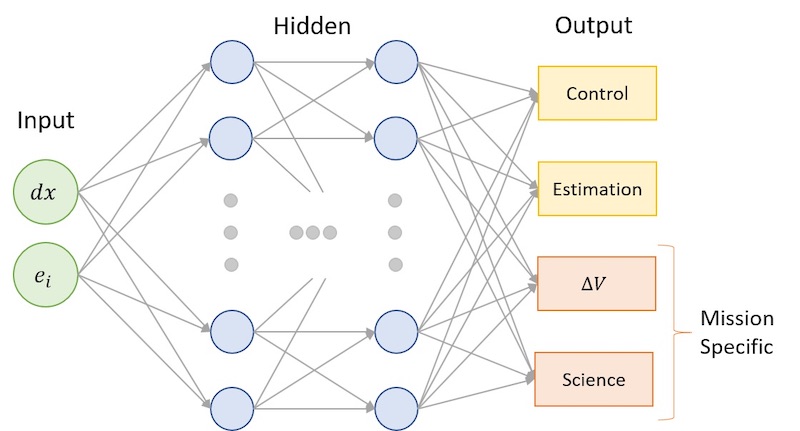

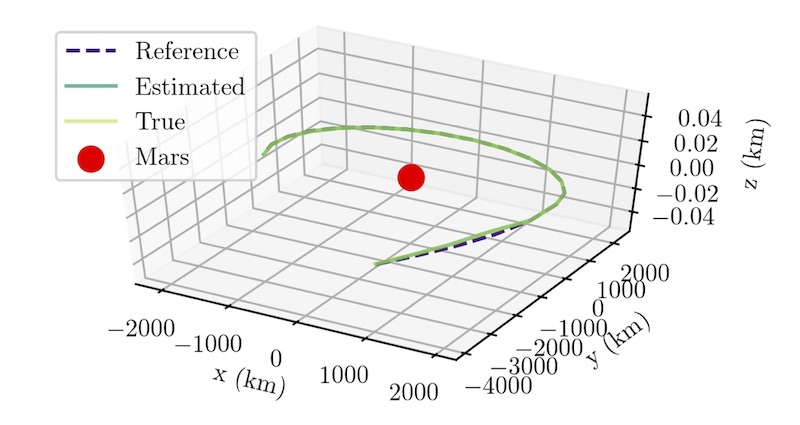

The AVS Lab is exploring approaches to fit spacecraft autonomy into emerging machine learning techniques, including the Partially Observable Markov Decision Process (POMDP) framework and its general solution through model-free Deep-Q reinforcement learning. Our approach is not to have the machine learning techniques rediscover well-known orbit and attitude maneuver solutions, but rather to use these techniques to provide high-level mission autonomy that can determine which flight modes are most appropriate and how to respond to failures on the spacecraft. Mission scenarios of interest are those where direct human intervention is not feasible, such as controlling large clusters of satellites or performing an orbit insertion about another planet. These machine learning techniques require extensive learning periods in which synthetic mission data is provided to learn which actions yield the best rewards. The AVS Lab is integrating open machine learning tools with the Basilisk astrodynamics simulation framework. Basilisk not only provides very fast simulations of complex spacecraft dynamics, but also allows the simulation fidelity to be readily increased or decreased. Further, its Python interface enables a powerful integration with the range of Python-based machine learning tools.
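To give a feel for learning a mode-selection policy from simulated experience, here is a minimal sketch that uses tabular Q-learning as a simplified stand-in for the Deep-Q approach mentioned above. It makes several assumptions: the environment is a hypothetical, fully observed toy battery model (not a POMDP and not a Basilisk simulation), the rewards are invented, and a lookup table takes the place of a neural network.

```python
import random

CHARGE, SCIENCE = 0, 1
MAX_BATTERY = 4  # battery level is an integer from 0 to MAX_BATTERY


def step(battery, action):
    """Toy dynamics (hypothetical): return (next_battery, reward)."""
    if action == CHARGE:
        return min(battery + 1, MAX_BATTERY), 0.0
    if battery == 0:
        return 0, -10.0  # science attempted on an empty battery
    return battery - 1, 1.0  # science succeeds and drains one unit


def train(steps=20_000, alpha=0.5, gamma=0.9, seed=0):
    """Learn Q-values from simulated experience, without using a model."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(MAX_BATTERY + 1)]
    battery = MAX_BATTERY
    for _ in range(steps):
        # Uniform random exploration; Q-learning is off-policy, so the
        # learned values still describe the greedy policy.
        action = rng.choice((CHARGE, SCIENCE))
        nxt, reward = step(battery, action)
        target = reward + gamma * max(q[nxt])
        q[battery][action] += alpha * (target - q[battery][action])
        battery = nxt
    return q


def greedy_policy(q):
    """Best action for each battery level under the learned Q-values."""
    return [max((CHARGE, SCIENCE), key=lambda a: row[a]) for row in q]


policy = greedy_policy(train())
```

In this toy problem the learned policy charges when the battery is empty and performs science otherwise. The agent is never told the dynamics in `step`; it only sees sampled transitions and rewards, which is the sense in which the method is model-free. A deep Q-network would replace the table `q` with a function approximator so that the same idea can scale to much larger state spaces.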